How important are AI data centers? In just months, Elon Musk’s xAI team converted a factory outside Memphis into a cutting-edge, 100,000-GPU data center housing Colossus, the supercomputer used to train the Grok chatbot.

Colossus was initially powered by temporary gas turbines, later replaced by grid power. Its first 100,000 chips were installed in only 19 days, drawing praise from NVIDIA CEO Jensen Huang. Today, it operates 200,000 GPUs, with plans to reach 1 million GPUs by the end of 2025. [1]

There are about 12,000 data centers throughout the world, nearly half of them in the United States. Now, more and more of these are being built or retrofitted for AI-specific workloads. Leaders include Musk’s xAI, Microsoft, Meta, Google, Amazon, OpenAI, and others.

High power is essential for such operations and, as with computational electronics of all sizes, heat issues need to be resolved.

GenAI

A key driver of data center growth is Generative AI (GenAI): AI that creates text, images, audio, video, and code using deep learning. Chatbots built on large language models, such as ChatGPT, are examples of GenAI, along with text-to-image models that generate images from written descriptions.

All of this is made possible by new generations of processors, mainly GPUs. They draw more power and generate more heat than their predecessors.

AI data centers prioritize high-performance computing (HPC) hardware: GPUs, FPGAs, ASICs, and ultra-fast networking. Compared to CPUs (150–200 W), today’s AI GPUs often run >1,000 W. To handle massive datasets and complex computations in real time, they need significant power and cooling infrastructure.

Data Center Cooling Basics

Traditional HVAC was sufficient for older CPU-driven data centers. Today’s AI GPUs demand far more cooling, both at the chip level and facility-wide. This has propelled a need for more efficient thermal management systems at both the micro (server board and chip) and macro (server rack and facility) levels. [4]

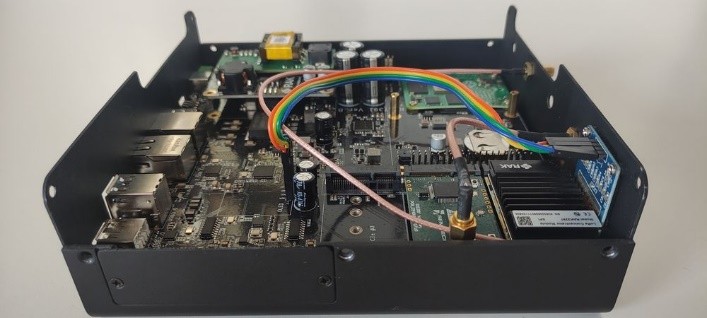

At Colossus, Supermicro 4U servers house NVIDIA Hopper GPUs cooled by:

- Cold plates

- Coolant distribution manifolds (1U between each server)

- Coolant distribution units (CDUs) with redundant pumps at each rack base [6]

Each 4U server is equipped with eight NVIDIA H100 Tensor Core GPUs. Each rack contains eight 4U servers, totaling 64 GPUs per rack.
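The rack arithmetic above can be checked with a short sketch. The per-server and per-rack counts come from the text, and the 100,000-GPU target is the cluster's initial size cited earlier. The assumption that every rack is fully populated is ours, so the result is a minimum rack count rather than a description of the actual floor plan.

```python
import math

GPUS_PER_SERVER = 8      # from the text: eight H100 GPUs per 4U server
SERVERS_PER_RACK = 8     # from the text: eight 4U servers per rack
TARGET_GPUS = 100_000    # initial Colossus build-out cited earlier

gpus_per_rack = GPUS_PER_SERVER * SERVERS_PER_RACK

# Assumption: every rack is fully populated, so this is a minimum rack count.
min_racks = math.ceil(TARGET_GPUS / gpus_per_rack)

print(f"GPUs per rack: {gpus_per_rack}")
print(f"Minimum racks for {TARGET_GPUS:,} GPUs: {min_racks:,}")
```

Under these assumptions, the initial build-out works out to a little over 1,500 racks, each needing its own manifolds and coolant distribution unit.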

Between every server is a 1U manifold for liquid cooling. The manifolds connect to the CDUs at the bottom of each rack, which exchange heat and include a redundant pumping system. The choice of coolant is determined by a range of hardware and environmental factors.

Role of Cooling Fans

Fans remain essential for DIMMs, power supplies, controllers, and NICs.

At Colossus, fans in the servers pull cooler air in from the front of the rack and exhaust it at the rear of the server. From there, the warm air is drawn through rear-door heat exchangers, liquid-cooled finned radiators that lower its temperature before it exits the rack.

Direct-to-Chip Cooling

NVIDIA’s DGX H100 and H200 server systems feature eight GPUs and two CPUs that must operate at ambient temperatures between 5°C and 30°C. An AI data center with a high rack density houses thousands of these systems performing HPC tasks at maximum load, so direct liquid cooling solutions are required.

Direct liquid cooling (cold plates contacting the GPU die) is the most effective method—outperforming air cooling by 82%. It is preferred for high-density deployments of the H100 or GH200.

Scalable Cooling Modules

Colossus represents the world’s largest liquid-cooled AI cluster, built on NVIDIA and Supermicro technology.

Most AI data centers are far smaller. Their power and cooling needs are lower but just as essential, and many of their heat issues can be resolved with compact, self-contained Cooling Distribution Modules (CDMs).

The compact iCDM-X cooling distribution module provides up to 1.6 MW of cooling for a wide range of AI GPUs and other chips. The module measures and logs all important liquid cooling parameters. It uses just 3 kW of power, and no external coolant is required.
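To put those figures in perspective, the sketch below divides the module's rated cooling capacity by an assumed per-GPU heat load. The 1.6 MW and 3 kW values come from the paragraph above. The 1,000 W per GPU is the round figure quoted earlier for current AI GPUs, used here only for illustration, and the assumption that all GPU heat reaches the liquid loop is a simplification, since some heat is normally removed by air.

```python
COOLING_CAPACITY_W = 1_600_000   # 1.6 MW rated cooling, from the text
MODULE_POWER_W = 3_000           # 3 kW module power draw, from the text
GPU_HEAT_W = 1_000               # assumed per-GPU heat load (illustrative)

# Upper bound on GPUs served, assuming all GPU heat goes to the liquid loop.
max_gpus = COOLING_CAPACITY_W // GPU_HEAT_W

# Heat removed per watt consumed by the module itself.
heat_per_watt = COOLING_CAPACITY_W / MODULE_POWER_W

print(f"GPUs supported at {GPU_HEAT_W} W each: {max_gpus}")
print(f"Heat removed per watt of module power: {heat_per_watt:.0f}")
```

These are best-case numbers at rated capacity. A real deployment would also have to account for CPUs, memory, and other heat sources on the same loop.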

These modules include:

- Pumps

- Heat exchangers

- Cold plates

- Digital monitoring (temp, pressure, flow)

Their sole external component is one or more cold plates removing heat from AI chips. Advanced Thermal Solutions (ATS) provides an industry-leading selection of custom and standard cold plates, including the high-performing ICEcrystal series.
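The monitored parameters listed above, flow and temperature in particular, are enough to estimate how much heat a liquid loop is carrying away, using the standard relation Q = ṁ · c_p · ΔT. The sketch below is illustrative only: it assumes water as the coolant, and the function name and example readings are hypothetical, not taken from any ATS product or its software.

```python
WATER_SPECIFIC_HEAT_J_PER_KG_K = 4186.0  # approximate value for liquid water
WATER_DENSITY_KG_PER_L = 1.0             # approximate; varies slightly with temperature


def heat_removed_w(flow_l_per_min: float, inlet_c: float, outlet_c: float) -> float:
    """Estimate heat carried away by a water loop, in watts (Q = m_dot * c_p * dT)."""
    mass_flow_kg_per_s = flow_l_per_min * WATER_DENSITY_KG_PER_L / 60.0
    return mass_flow_kg_per_s * WATER_SPECIFIC_HEAT_J_PER_KG_K * (outlet_c - inlet_c)


# Hypothetical readings: 2 L/min through a cold plate, coolant warming from 30 °C to 40 °C.
print(f"{heat_removed_w(2.0, 30.0, 40.0):.0f} W")
```

With these example readings the loop carries roughly 1.4 kW, about the heat output of one high-end AI GPU. A different coolant would need its own specific heat and density values.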

Cooling Edge AI and Embedded Applications

AI isn’t just for big data centers—edge AI, robotics, and embedded systems (e.g., NVIDIA Jetson Orin, AMD Kria K26) use processors running under 100 W. These are effectively cooled with heat sinks and fan sinks from suppliers like Advanced Thermal Solutions. [11]

NVIDIA also partners with Lenovo, whose 6th-gen Neptune cooling system enables full liquid cooling (fanless) across its ThinkSystem SC777 V4 servers—targeting enterprise deployments with NVIDIA Blackwell + GB200 GPUs. [12]

Benefits gained from the Neptune system include:

- Full system cooling (GPUs, CPUs, memory, I/O, storage, regulators)

- Efficient for 10-trillion-parameter models

- Improved performance, energy efficiency, and reliability

Conclusion

With surging demand, AI data centers are now a major construction focus. Historically, cooling problems have been the second most common cause of data center downtime, behind power issues. Given the high power needed for AI computing, these builds should be carefully matched to their local communities in terms of electrical needs and sources and water consumption. [13]

Goldman Sachs projects that AI workloads will drive a 165% increase in data center power demand by 2030, and other estimates (IBM/Newmark) put U.S. demand at nearly double 2022 levels by then. Sustainable design and resource-conscious cooling are essential for the next wave of AI infrastructure. [14,15]

References

1. The Guardian, https://www.theguardian.com/technology/2025/apr/24/elon-musk-xai-memphis

2. Fibermall, https://www.fibermall.com/blog/gh200-nvidia.htm

3. NVIDIA, https://resources.nvidia.com/en-us-grace-cpu/grace-hopper-superchip?ncid=no-ncid

4. IDTechEx, https://www.idtechex.com/en/research-report/thermal-management-for-data-centers-2025-2035-technologies-markets-and-opportunities/1036

5. Data Center Frontier, https://www.datacenterfrontier.com/machine-learning/article/55244139/the-colossus-ai-supercomputer-elon-musks-drive-toward-data-center-ai-technology-domination

6. Supermicro, https://learn-more.supermicro.com/data-center-stories/how-supermicro-built-the-xai-colossus-supercomputer

7. Serve The Home, https://www.servethehome.com/inside-100000-nvidia-gpu-xai-colossus-cluster-supermicro-helped-build-for-elon-musk/2/

8. Naddod, https://www.naddod.com/blog/introduction-to-nvidia-dgx-h100-h200-system

9. Flex, https://flex.com/resources/flex-and-jetcool-partner-to-develop-liquid-cooling-ready-servers-for-ai-and-high-density-workloads

10. Advanced Thermal Solutions, https://www.qats.com/Products/Liquid-Cooling/iCDM

11. Advanced Thermal Solutions, https://www.qats.com/Heat-Sinks/Device-Specific-Freescale

12. Lenovo, https://www.lenovo.com/us/en/servers-storage/neptune/?orgRef=https%253A%252F%252Fwww.google.com%252F

13. Deloitte, https://www2.deloitte.com/us/en/insights/industry/technology/technology-media-and-telecom-predictions/2025/genai-power-consumption-creates-need-for-more-sustainable-data-centers.html

14. Goldman Sachs, https://www.goldmansachs.com/insights/articles/ai-to-drive-165-increase-in-data-center-power-demand-by-2030

15. Newmark, https://www.nmrk.com/insights/market-report/2023-u-s-data-center-market-overview-market-clusters